Excel can only open spreadsheets with fewer than 1,048,576 rows.

The websites that I have been working with lately are getting increasingly bigger and more complex. It doesn’t seem that long ago that the client websites I worked with were rarely larger than a few thousand pages. That was back in the day when the clients I saw were like a startup who invented a pillow that is also a desk, or an eCommerce store that was like Bed Bath and Beyond for camouflage stuff.

As websites have gotten bigger and more complex, I’ve had to upgrade my day to day process to accommodate more data. Not long ago the type of work I was used to doing shifted, and it was rare for me to get a project that didn’t involve working with Screaming Frog files that are longer than 1 million URLs. This is how I learned to do it.

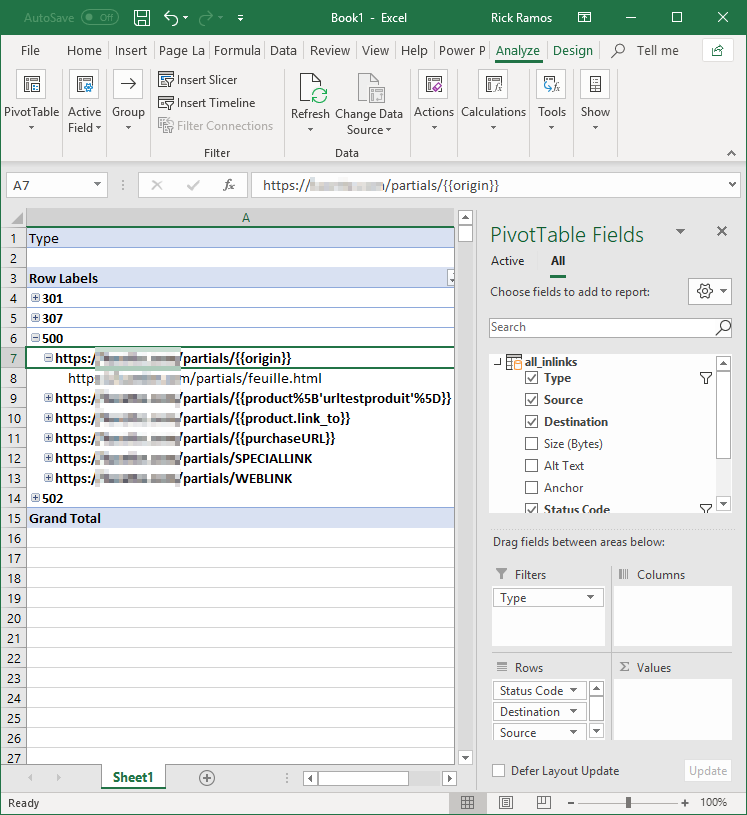

One of the files I use all the time is the all_inlinks export from Screaming Frog. This file can be ridiculously big because it is an archive of:

- Every internal link to every file in the crawl, including…

- AHREF

- JS

- CSS

- Images

- etc. etc. etc.

- Status codes

- Page location of every internal link

- The anchor text/alt attributes

- Other stuff I don’t typically use

The all_inlinks file has a ton of helpful stuff in it, but to read it I break this data into manageable chunks with a pivot table. Although my pivot table may have far fewer than 1M rows, I can’t open the file without truncating everything at line 1,048,577. It turns out that the trick is to load crawler data into an Excel Data Model.

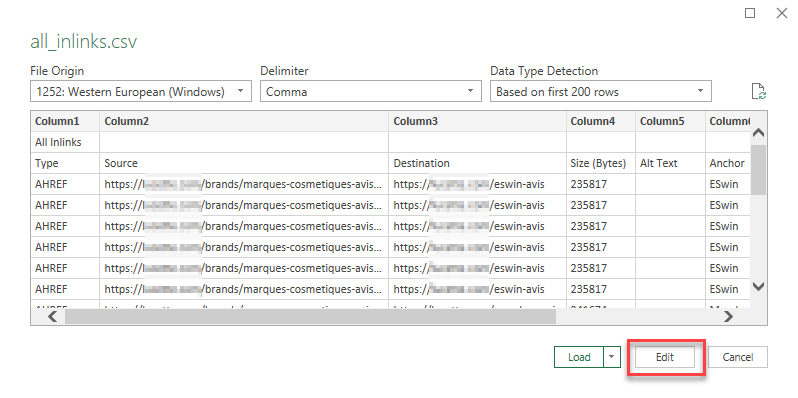

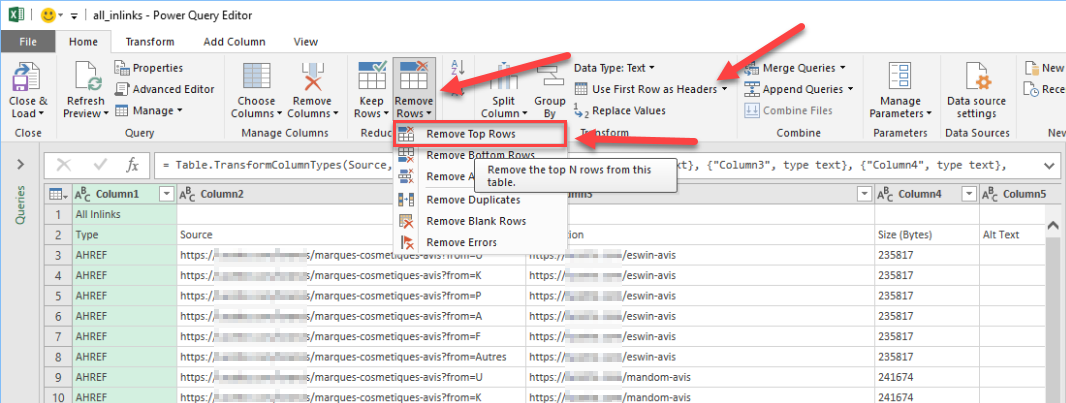

Excel will typically use the first column as headers, but Screaming Frog adds the title of the export in the first row. Once the CSV is loaded, the top row needs to be removed, and the new top row will be converted to column headers. After that quick fix, the rest of the process is pretty straightforward.

Loading crawler data into an Excel Data Model

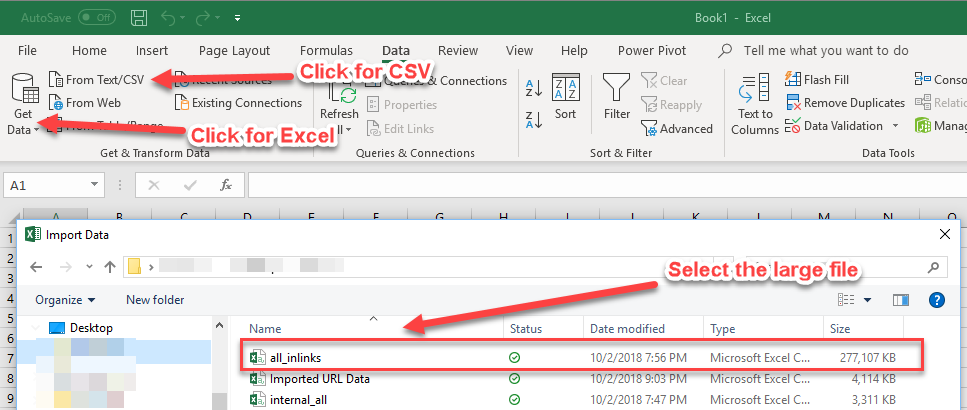

- Open a new Excel Worksheet (.xlsx) and click Data at the top o the page.

- Click From Text/CSV (or Get Data if the other file is another .xlxs, .xml, JSON, etc.)

- Select all_inlinks.csv and click Open

- On the next screen click Edit, which will bring you to the Excel Power Query Editor

- Click Remove Rows > Remove Top Rows

- Type 1 into the popup box and click Ok (not pictured)

- Click Use First Row as Headers

(You can do a lot of other helpful stuff in Power Query Editor too, but let’s just start with this for now.)

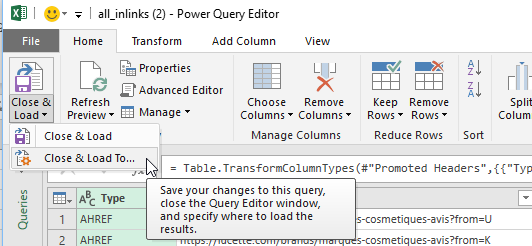

- Click Close & Load To…

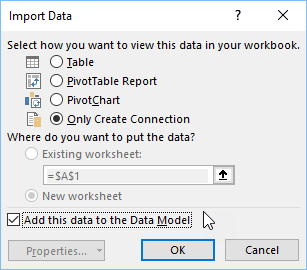

- Click Only Create Connection, check Add this data to the Data Model, and click OK

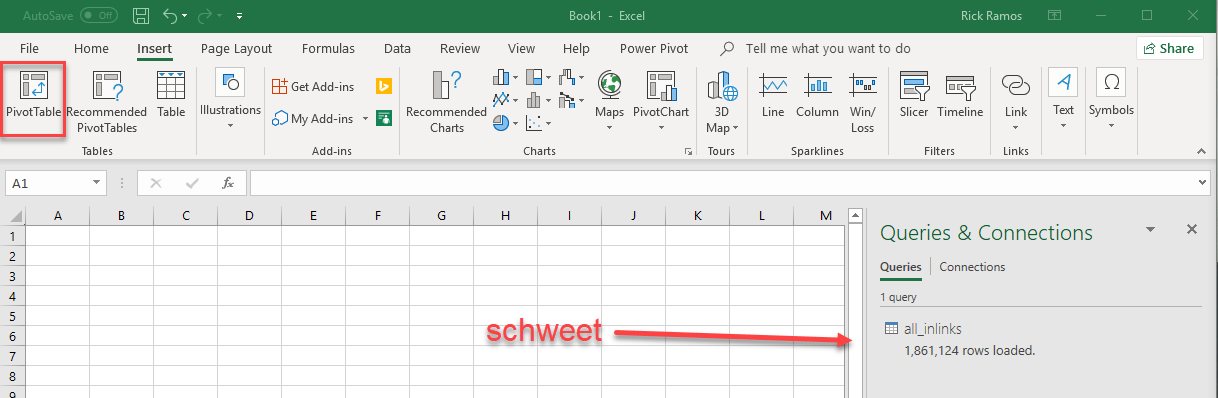

At this point, all of the CSV lines have been added, behind the scenes, so that they can be inserted into pivot tables.

When you click on the all_inlinks file in the Pivot Table Fields box, it will expand to show the column headers. You can use these to make pivot tables like you would from the original CSV file exported from Screaming Frog.

Great post, Rick. This is interesting but as usual, the plugin is only available for Windows. Any alternatives for us, the macOS, users?

Thanks a lot, Kaludio.

Unfortunately, I don’t know how to use Power Query on Mac. My best advice is to run it on a virtual machine. Good luck!